Jul 2023

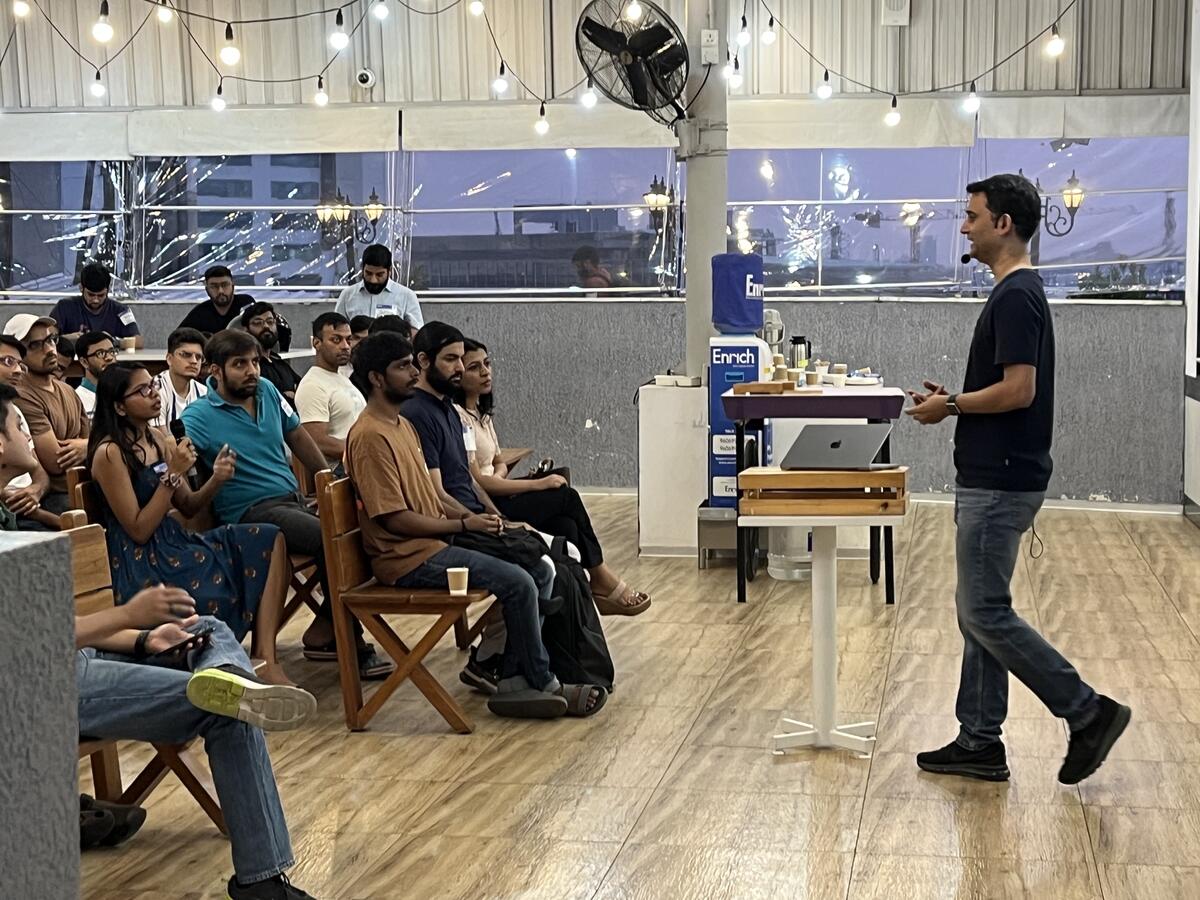

29 Sat 05:00 PM – 07:00 PM IST

Submitted Jul 20, 2023

Abstract

Live streaming platforms, which show live content to users, have recently been gaining popularity. Videos made by creators typically run from 15 minutes to an hour. Our research found that a sizable share of users drop off within the first 30 seconds of a video. Another piece of research shows that, on average, a user has an attention span of only 30 seconds, and this number is even lower for Gen Z, our main target audience. To address this, we want to identify the juiciest segments of each video and add external features that prompt a user to land on the base video, increasing overall user engagement and the appeal of the video. We also want to build a customizable framework that caters not only to snippets but also to trailers, mashups, etc.

Literature Review

To solve this problem, we researched the tools that already exist on the market. Our global search found no single tool or solution that addresses the problem as a whole; several solutions tackle it in bits and pieces, but not fully. We then read research papers on how to do this end-to-end, which gave us a couple of ideas to try.

Approach

We broke our solution into two parts: how to extract the base snippet (the juiciest part of a video) and which post-processing techniques to apply to it. To summarise our solution:

Base snippet:

Post processing:

Impact and Future Work

We deployed our solution at scale (500 video snippets per day) in India and saw an increase of close to 80% in overall time spent and user engagement. As next steps, we plan to scale this solution to Indonesia and then to the US. We also aim to create a new feed just for these videos, and we will keep improving both base snippets and post-processing.
