Sat, 29 Jul 2023, 05:00 PM – 07:00 PM IST

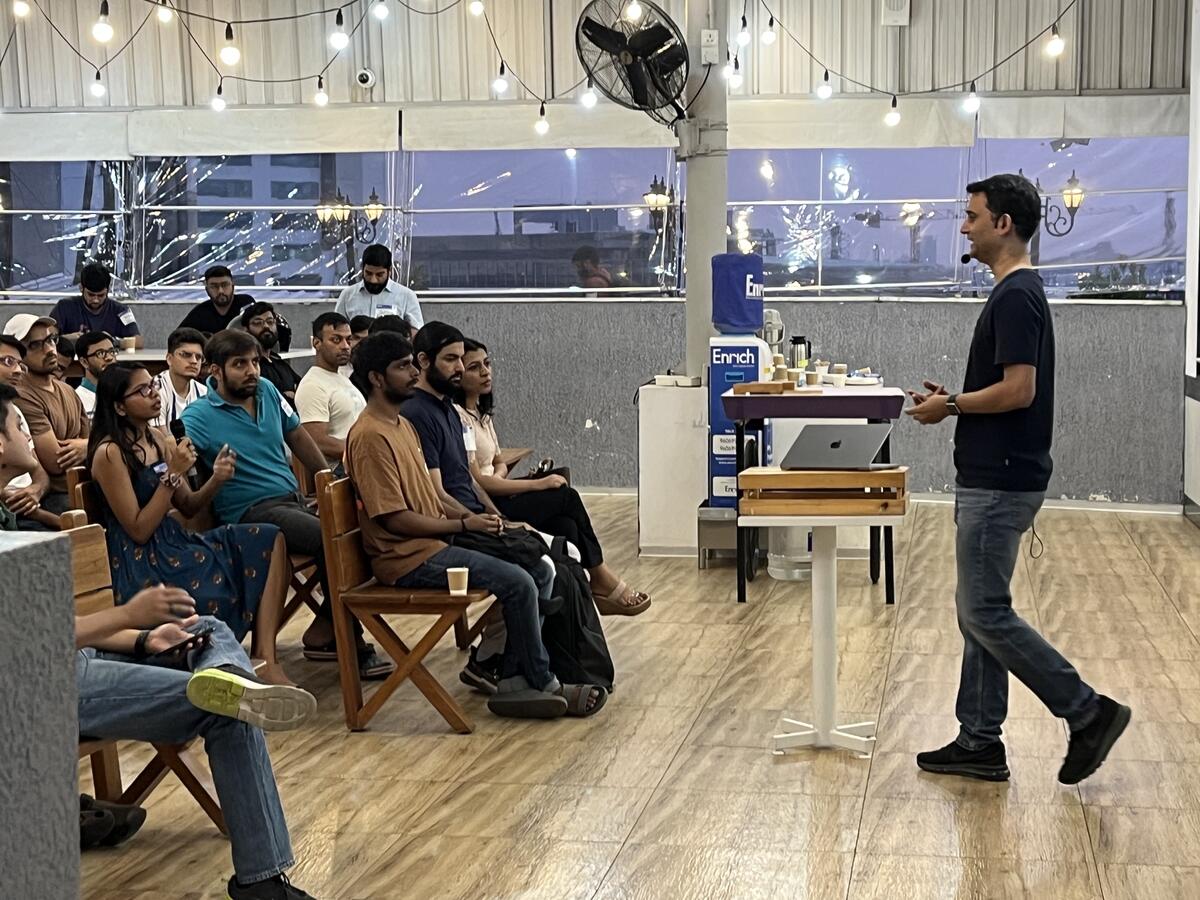

Sachin

@sachindh

Submitted Jul 9, 2023

Three years ago, OpenAI’s GPT-3 model created a buzz in the Machine Learning community and garnered some media attention, but it had limited impact on regular users. In contrast, the launch of ChatGPT two and a half years later became a viral sensation, thanks to the user-friendly and enjoyable experience it provided. In this talk, we’ll explore the model finetuning approaches that contributed to the excitement around ChatGPT. We’ll cover who should do this finetuning and when, and give a quick overview of notebooks used to customize Large Language Models (LLMs) for specific purposes.

Developing a system like ChatGPT involves three stages: pretraining the base language model, supervised finetuning, and finetuning with Reinforcement Learning. The first stage is crucial for good performance, but it is expensive ($100,000 to $10,000,000) and requires deep expertise. Finetuning an existing model, on the other hand, is cheaper ($100 to $1,000) and easier. With many open source large language models now available, we’ll show hackers how to customize them for their specific needs. We’ll also discuss different situations and the finetuning strategies that suit each one.

This talk is mainly geared towards Machine Learning engineers, as we will go through some code snippets. However, we will also discuss high-level concepts to make it useful for Generative AI enthusiasts.

Personal Bio:

Slides for the talk: https://docs.google.com/presentation/d/1Unw6GsoCHA2KmFMHOOhenamU3Do1VflMVksbmAancLs/
