Jul 2023

24 Mon

25 Tue

26 Wed

27 Thu

28 Fri

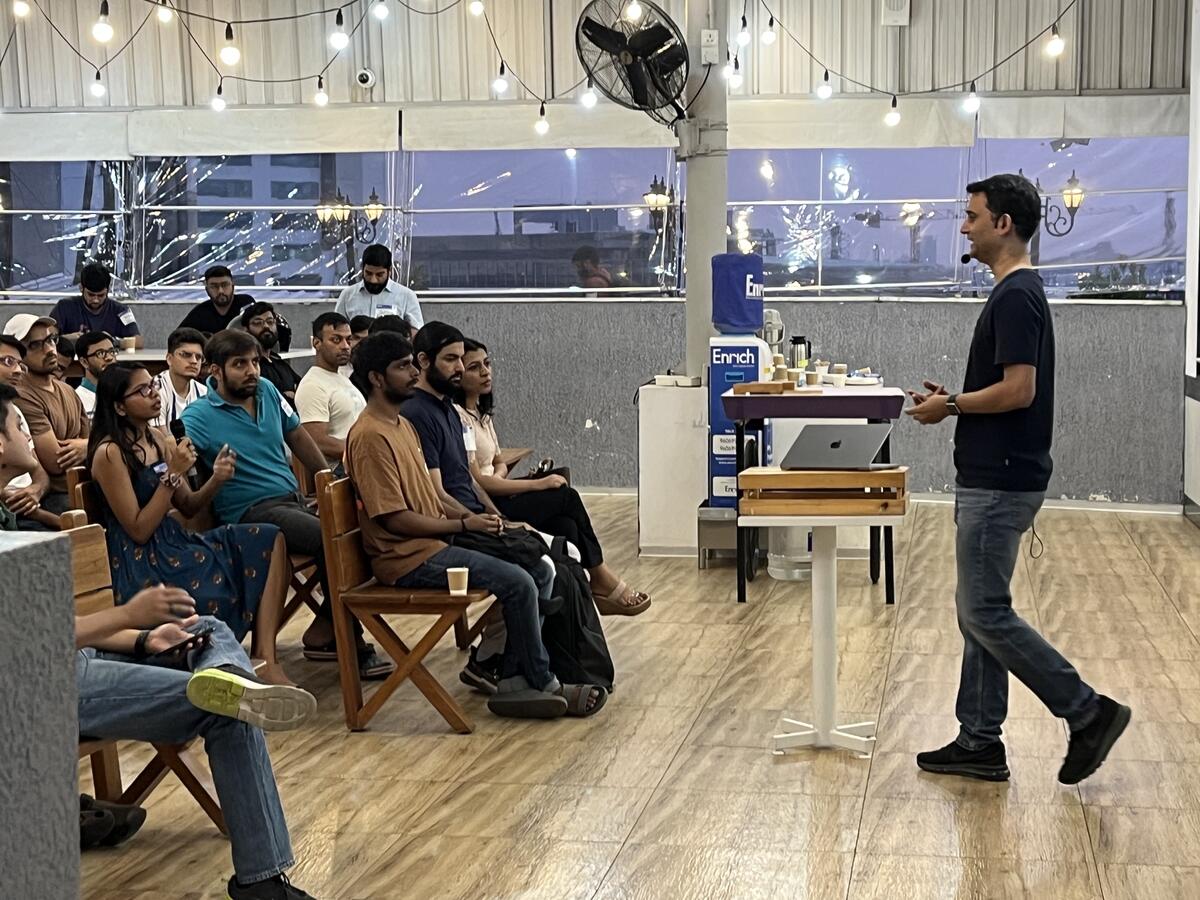

29 Sat 05:00 PM – 07:00 PM IST

30 Sun

Submitted Jul 6, 2023

Recommendation systems built on large language models (LLMs) have the potential to address the cold start problem: the challenge of recommending items to users who have not yet interacted with the system. LLMs can learn representations of users and items that capture latent preferences even when interaction history is sparse. This lets them make recommendations to new users that are more likely to match their interests.
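As a rough illustration of the idea, the sketch below ranks catalog items for a brand-new user by comparing a short text profile against item descriptions in a shared vector space. Everything here is an assumption made for illustration: `embed` is a hypothetical stand-in that uses word counts where a real system would call an LLM text encoder, and the catalog, profile, and function names are invented.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Hypothetical stand-in for an LLM encoder: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def recommend(profile: str, catalog: dict[str, str], k: int = 2) -> list[str]:
    """Rank items by similarity to a text profile; no interaction history needed."""
    user_vec = embed(profile)
    ranked = sorted(
        catalog,
        key=lambda name: cosine(user_vec, embed(catalog[name])),
        reverse=True,
    )
    return ranked[:k]


# Invented example data: item descriptions and a new user's sign-up text.
catalog = {
    "Trail Runner 2": "lightweight running shoes for trail and mountain paths",
    "Espresso Pro": "home espresso machine with milk frother",
    "Summit Pack": "hiking backpack for mountain trail trips",
}
new_user = "enjoys trail running and mountain hiking"
top_items = recommend(new_user, catalog)
print(top_items)
```

The point of the sketch is only that a new user can be scored against items from text alone. Swapping `embed` for a learned encoder would change the quality of the similarity, not the overall shape of the approach.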

However, LLMs do not completely solve the cold start problem. They still need to be trained on a large dataset of user-item interactions to learn effective user and item representations, which is a challenge for new services with little user data. LLMs are also computationally expensive to train, which can make them impractical for some applications.

Despite these challenges, LLMs have the potential to significantly improve the performance of recommendation systems, especially for new users. As LLMs become more powerful and efficient, they are likely to play an increasingly important role in recommendation systems.
