Prakhar Srivastava

@prakharcode

Pay Some Attention using Keras!

Submitted Aug 26, 2017

PAY SOME ATTENTION!

In this talk, the main focus will be on the recently developed attention mechanism for encoder-decoder models.

Attention is a relatively new mathematical tool, developed to overcome a major shortcoming of encoder-decoder models: MEMORY!

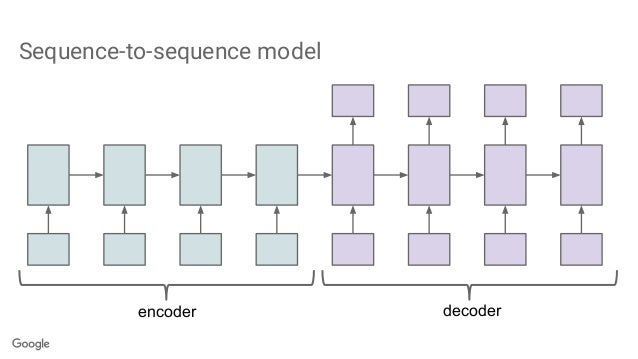

An encoder-decoder model is a special type of architecture in which a deep neural network, the ENCODER, encodes raw data into a fixed, lower-dimensional representation. A second model, the DECODER, then uses this fixed representation to produce the encoder's input in some other form (for example, a translation of an input sentence).

The image above shows the general layout of an encoder-decoder model.
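To make the encoder/decoder split concrete, here is a minimal pure-Python sketch. It assumes a tiny RNN-style encoder over scalar inputs with hand-picked weights (`W_IN`, `W_REC`, `W_OUT` and `BIASES` are all hypothetical; a real model learns them). The point is only that the encoder's output has the same fixed size whether the input has 2 elements or 100, and that the decoder sees nothing but that output.

```python
import math

# Hypothetical toy parameters: a real encoder-decoder learns its weights in training.
W_IN = 0.8                       # input-to-hidden weight
W_REC = 0.5                      # hidden-to-hidden (recurrent) weight
W_OUT = 1.5                      # hidden-to-output weight used by the decoder
BIASES = [-0.2, 0.0, 0.1, 0.3]   # one bias per hidden unit, so the units differ


def encode(sequence):
    """ENCODER: compress a sequence of ANY length into len(BIASES) numbers.

    Each hidden unit runs the recurrence h = tanh(W_IN * x + W_REC * h + b),
    and only the final state is kept: the fixed representation.
    """
    state = [0.0] * len(BIASES)
    for x in sequence:
        state = [math.tanh(W_IN * x + W_REC * h + b) for h, b in zip(state, BIASES)]
    return state


def decode(state, steps):
    """DECODER: generate `steps` outputs from the fixed representation alone."""
    outputs = []
    for _ in range(steps):
        state = [math.tanh(W_REC * h) for h in state]
        outputs.append(W_OUT * sum(state) / len(state))
    return outputs


short_code = encode([1.0, 2.0])
long_code = encode([0.5] * 100)
print(len(short_code), len(long_code))   # 4 4
print(len(decode(short_code, steps=3)))  # 3
```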

However, this model struggles to encode very large inputs or long sequences. The main reason is the fixed representation: everything the decoder will ever see must be squeezed into one vector of fixed length, which forces the model to overlook some necessary details.
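A toy illustration of why the fixed representation becomes a bottleneck, assuming a deliberately simple encoder (a one-number exponential moving average, not an LSTM): every new input shrinks the contribution of earlier inputs, so in a long sequence the first element barely affects the final code. LSTMs are designed to retain information much longer than this toy, but they still have to fit the whole input into a fixed-size vector.

```python
def leaky_encode(sequence, decay=0.5):
    """Toy fixed-size encoder: a single number that is an exponential moving
    average of the inputs. Each new input scales older contributions by `decay`."""
    h = 0.0
    for x in sequence:
        h = decay * h + (1.0 - decay) * x
    return h


def first_token_influence(length):
    """How much the final code changes when only the FIRST input flips 0 -> 1."""
    tail = [1.0] * (length - 1)
    return abs(leaky_encode([1.0] + tail) - leaky_encode([0.0] + tail))


print(first_token_influence(2))   # 0.25
print(first_token_influence(40))  # about 9.1e-13: the first input is effectively lost
```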

The bottleneck layer


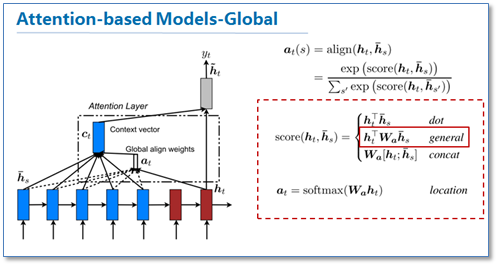

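To solve this problem comes attention, a mathematical technique that lets the decoder overcome the limitation of the fixed representation: instead of relying on one summary vector, the decoder can look back at all of the encoder's intermediate states at every output step. This in turn helps in many further tasks.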
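One common way to write this down, following the Bahdanau-style formulation (the notation here is chosen for illustration): let $h_1, \dots, h_T$ be the encoder's hidden states and $s_{t-1}$ the decoder's previous state. At each output step $t$ the decoder scores every encoder state, normalises the scores with a softmax, and uses the weighted sum as a context vector $c_t$:

```latex
\begin{aligned}
e_{t,i} &= \operatorname{score}(s_{t-1}, h_i), \qquad i = 1, \dots, T \\
\alpha_{t,i} &= \frac{\exp(e_{t,i})}{\sum_{j=1}^{T} \exp(e_{t,j})} \\
c_t &= \sum_{i=1}^{T} \alpha_{t,i} \, h_i
\end{aligned}
```

Typical score functions are additive, $\operatorname{score}(s, h) = v_a^\top \tanh(W_a s + U_a h)$ (Bahdanau-style), and dot-product, $\operatorname{score}(s, h) = s^\top h$ (Luong-style, which scores the current decoder state $s_t$ rather than $s_{t-1}$). Because $c_t$ is recomputed at every step, the decoder is no longer limited to a single fixed vector.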

The talk will also focus on the mathematical fundamentals of this mechanism.

A brief introduction to related topics such as early vs. late attention and soft vs. hard attention.

A live demo of Visual Question Answering (VQA) techniques, followed by an outro on specific tasks.
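The soft/hard distinction can be sketched in a few lines of pure Python. This assumes dot-product scoring (one common choice; additive scoring changes only how the scores are computed) and treats the encoder states as both keys and values; the function names are illustrative, not taken from the talk. Soft attention returns a weighted average of all encoder states, so it is differentiable end to end; hard attention samples a single state according to the same weights.

```python
import math
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys):
    """Dot-product score between the decoder query and each encoder state,
    normalised with softmax so the weights are positive and sum to 1."""
    return softmax([dot(query, k) for k in keys])


def soft_attention(query, keys, values):
    """Soft attention: the context is the weighted AVERAGE of all values."""
    weights = attention_weights(query, keys)
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]


def hard_attention(query, keys, values, rng=random):
    """Hard attention: SAMPLE one position using the weights and return its value.
    Sampling is not differentiable, so training typically needs REINFORCE-style
    gradient estimators instead of plain backpropagation."""
    weights = attention_weights(query, keys)
    index = rng.choices(range(len(values)), weights=weights, k=1)[0]
    return values[index]


encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy encoder outputs
query = [4.0, 0.0]                                     # toy decoder state
print(attention_weights(query, encoder_states))
print(soft_attention(query, encoder_states, encoder_states))
print(hard_attention(query, encoder_states, encoder_states, random.Random(0)))
```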

Outline

This talk will cover the fundamentals of the attention technique in neural networks, with an implementation in Python, and will introduce the audience to the advances that are a direct result of this technique.

Break-up:

- Intro to RNNs

- LSTMs

- Sequence-to-sequence (seq2seq) architecture

- Problems in seq2seq and the rise of attention

- Attention explanation

- Coding an attention model in Keras

- Use cases

- Outro
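The outline's "Coding an attention model in Keras" step is not accompanied by code in this proposal. As a framework-free sketch (pure Python, so it runs without Keras installed), the loop below shows the key idea such a model implements: a decoder that recomputes its context from ALL encoder states at every step, here with Bahdanau-style additive scoring. The parameters `W_A`, `U_A`, `V_A` and the state-update rule are hypothetical, hand-picked for a 2-dimensional toy.

```python
import math

# Hypothetical, hand-picked parameters for a 2-dimensional toy model;
# in a Keras model these would be trainable weights learned by backpropagation.
W_A = [[1.0, 0.0], [0.0, 1.0]]   # projects the decoder state
U_A = [[0.5, 0.0], [0.0, 0.5]]   # projects each encoder state
V_A = [1.0, -1.0]                # reduces the tanh features to one score


def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def additive_score(s, h):
    """Bahdanau-style score: v_a^T tanh(W_a s + U_a h)."""
    features = [math.tanh(a + b) for a, b in zip(matvec(W_A, s), matvec(U_A, h))]
    return sum(v * f for v, f in zip(V_A, features))


def attend(s, encoder_states):
    """Return softmax attention weights and the weighted-sum context vector."""
    scores = [additive_score(s, h) for h in encoder_states]
    m = max(scores)
    exps = [math.exp(x - m) for x in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    context = [sum(w * h[d] for w, h in zip(weights, encoder_states))
               for d in range(len(encoder_states[0]))]
    return weights, context


def decode_with_attention(encoder_states, steps):
    """Toy decoder: at EVERY step it recomputes a context vector from ALL
    encoder states instead of relying on one fixed summary vector."""
    s = [0.0, 0.0]
    history = []
    for _ in range(steps):
        weights, context = attend(s, encoder_states)
        # Hypothetical state update mixing the previous state and the context.
        s = [math.tanh(a + c) for a, c in zip(s, context)]
        history.append(weights)
    return history


encoder_states = [[2.0, 0.0], [0.0, 2.0], [1.0, 1.0]]
for step, weights in enumerate(decode_with_attention(encoder_states, steps=3)):
    print(step, [round(w, 3) for w in weights])
```

In TensorFlow 2.x, Keras provides `tf.keras.layers.AdditiveAttention` (Bahdanau-style) and `tf.keras.layers.Attention` (dot-product, Luong-style), which compute the same kind of weighted context over batched tensors; whether the talk's demo uses these built-in layers or a custom layer is not stated in the proposal.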

Requirements

Keras installed

Speaker bio

I’m a junior in computer science with a thorough understanding of, and great interest in, deep learning. I’ve mentored many of my colleagues and friends at GSS tech solutions and IEEE MSIT on these vast topics. Being part of the Stanford Scholar initiative was also a great experience: I presented two papers there and was the directly responsible individual for an international group within it. I’ve completed some 17 courses on deep learning covering its best practices, and it would be a great pleasure to work on many more new projects like these.
