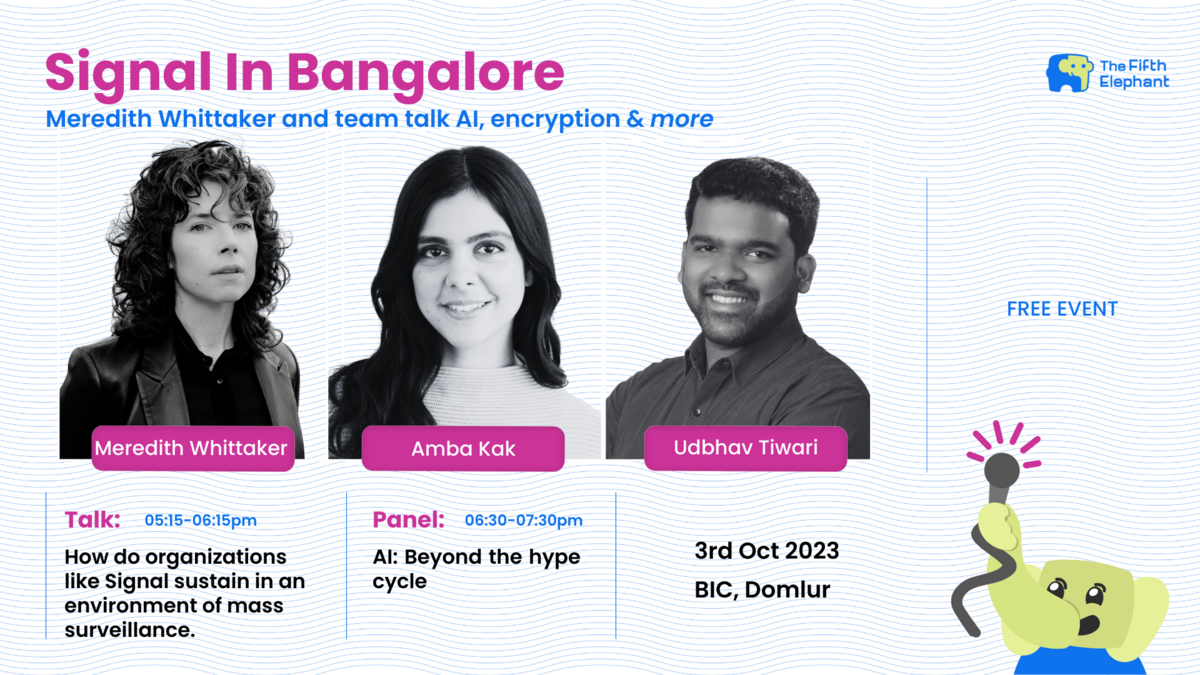

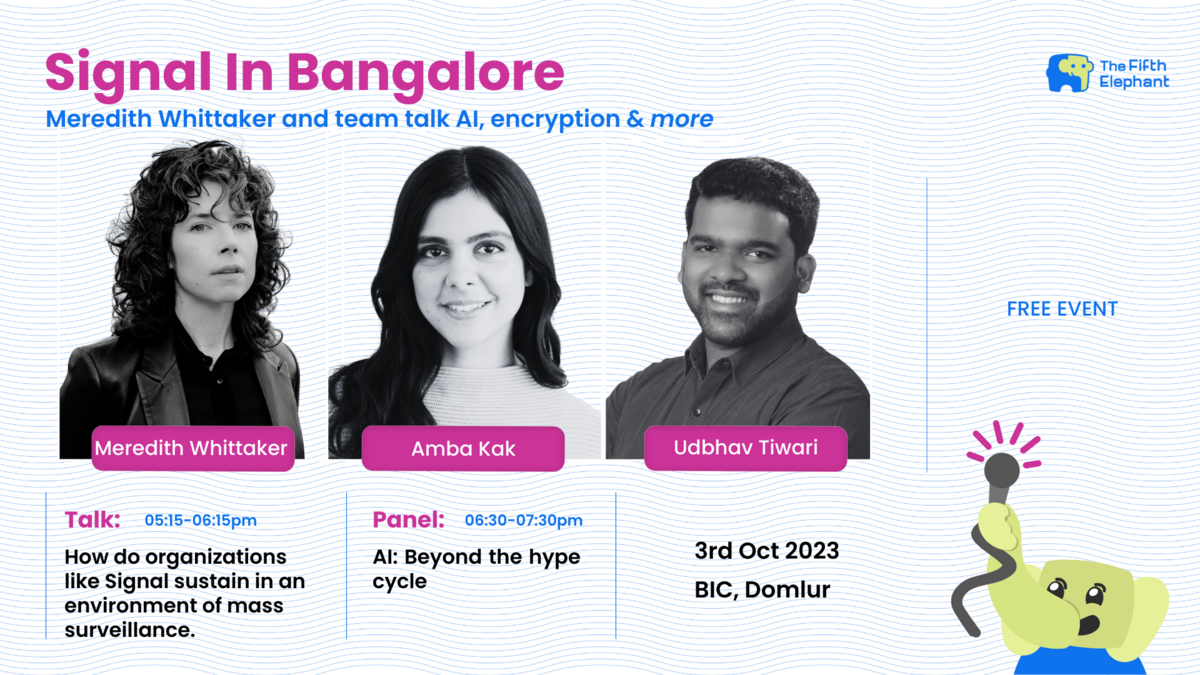

Signal in Bangalore

Signal Foundation's President, Meredith Whittaker, and the Signal team talk about AI, encryption and more.

Tue, 3 Oct 2023, 05:00 PM – 07:30 PM IST

Anwesha Sen

@anwesha25

Submitted Oct 12, 2023

On 3rd October 2023, a public event was hosted in collaboration with the Signal Foundation, The Fifth Elephant and Bangalore International Centre (BIC). The event consisted of two sessions. The following is a summary of the first session, a talk by Meredith Whittaker, President of Signal Foundation, moderated by Kiran Jonnalagadda, co-founder of Hasgeek.

Signal is a private messaging app built with end-to-end encryption, so that only the participants in a conversation have access to its contents. The Signal team is working to bring back the norm of private communication that existed before the development, and then commercialization, of networked infrastructure in the 1990s. That commercialization gave rise to the surveillance business model, in which data is monetized for advertising and AI, and which is now the engine of the tech economy. Signal does not participate in this model and does not collect, let alone monetize, user data. So how does Signal ensure encryption and sustain itself in such a tech economy?

Trevor Perrin and Moxie Marlinspike developed the Signal Protocol in 2013. It is used for message encryption in Signal and in other messaging apps such as WhatsApp. The protocol ensures that even those with access to Signal’s servers cannot decipher messages, so Signal itself has no access to user communications. On top of this, Signal has developed novel cryptographic techniques to protect users’ metadata, i.e. their profile name, profile photo, who they are talking to, etc. This is unique to Signal.

Privacy is about power and power relations. One of the ways in which those who have power secure it is by building an information asymmetry in their favor: the more information they have about those whom they govern or oppress, the more they can control them. Privacy is fundamental to the ability to participate in a meaningful democracy, to organize, to have an independent union, and to converse freely. With more privacy and private communications, there is a better chance of shaping a livable world rather than a world in which a handful of corporations hold inordinate power over lives and institutions across the globe through the surveillance capabilities and infrastructures they have developed.

Signal is a not-for-profit organization and has to have a charitable aim. This requires them to follow certain transparency protocols. They also cannot receive investments in the classic venture capital sense, and if they were ever acquired, the executives and the board would have to reinvest the money in charitable causes.

These are some of the key barriers, or key protections, that allow them to continue their mission of providing private communications. They matter particularly in the tech industry, where the primary business model, monetizing surveillance, is exactly what these protections guard against. As a result, Signal does not have to compromise on its privacy focus just because surveillance is more profitable.

Signal is donation-based and received a substantial investment from WhatsApp co-founder Brian Acton, who was disappointed that WhatsApp became less privacy-friendly after it was acquired by Meta. His investment allowed Signal to work on developing a more sustainable revenue model outside of the surveillance business model.

Decentralization can be incredibly insecure and often does not promote the kind of collaborative consensus that is required for encryption, since encryption needs to operate on every end. Signal has a very human-centered approach and doesn’t want to leave a section of users vulnerable because the people hosting their servers are unavailable. A decentralized infrastructure would also cost Signal hundreds of millions of dollars a year. Moreover, they cannot rely on volunteer labor and need to ensure the same robust coverage in every part of the world. Finally, using a decentralized protocol that lets anyone join and participate does not by itself ensure decentralized power.

The end-to-end encryption protocols used at Signal are open source, and the Android app has reproducible builds: anyone who wants to verify it can build an APK themselves and check that it is the same as the build they download from the Play Store. And since everything is end-to-end encrypted, there is not very much that the service can do that would be malicious. So a malicious server is not a threat model for Signal in the same way that it might be for other messaging apps where the server is the client and everything on the server is plaintext.
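The APK comparison described above can be illustrated with a minimal sketch. It is not Signal's own verification tooling. It assumes that a store-signed APK and a locally built one differ only in signature files under `META-INF/`, which is a simplification, and the function names `entry_digests` and `apks_match` are hypothetical.

```python
import hashlib
import zipfile

# Assumption: signing material lives under META-INF/ and is the only
# difference expected between a local build and the store download.
IGNORED_PREFIXES = ("META-INF/",)


def entry_digests(apk_path):
    """Map each archive entry name to the SHA-256 of its uncompressed bytes."""
    digests = {}
    with zipfile.ZipFile(apk_path) as apk:
        for name in apk.namelist():
            if name.startswith(IGNORED_PREFIXES):
                continue  # skip signature files
            digests[name] = hashlib.sha256(apk.read(name)).hexdigest()
    return digests


def apks_match(first_path, second_path):
    """Return True if both APKs contain the same entries with the same contents."""
    return entry_digests(first_path) == entry_digests(second_path)
```

Calling `apks_match("local-build.apk", "play-store.apk")` (both paths are placeholders) returns `True` only when every non-signature entry is byte-for-byte identical, which is the property a reproducible build is meant to provide.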

At https://signal.org/bigbrother/ one can view the requests for access to data that Signal has received from governments. The information they have been able to turn over is extraordinarily limited: only when somebody registered for the service and the last time they connected to it. Signal, as well as their hosts, can only view encrypted data which cannot be deciphered.

Signal’s position has always been to never implement a backdoor. Encryption either works for everyone, or it’s broken for everyone. If it came down to the choice between being forced to implement a backdoor or leave, they would leave after trying alternatives to continue providing their service, such as by using proxy servers and other circumvention techniques.
