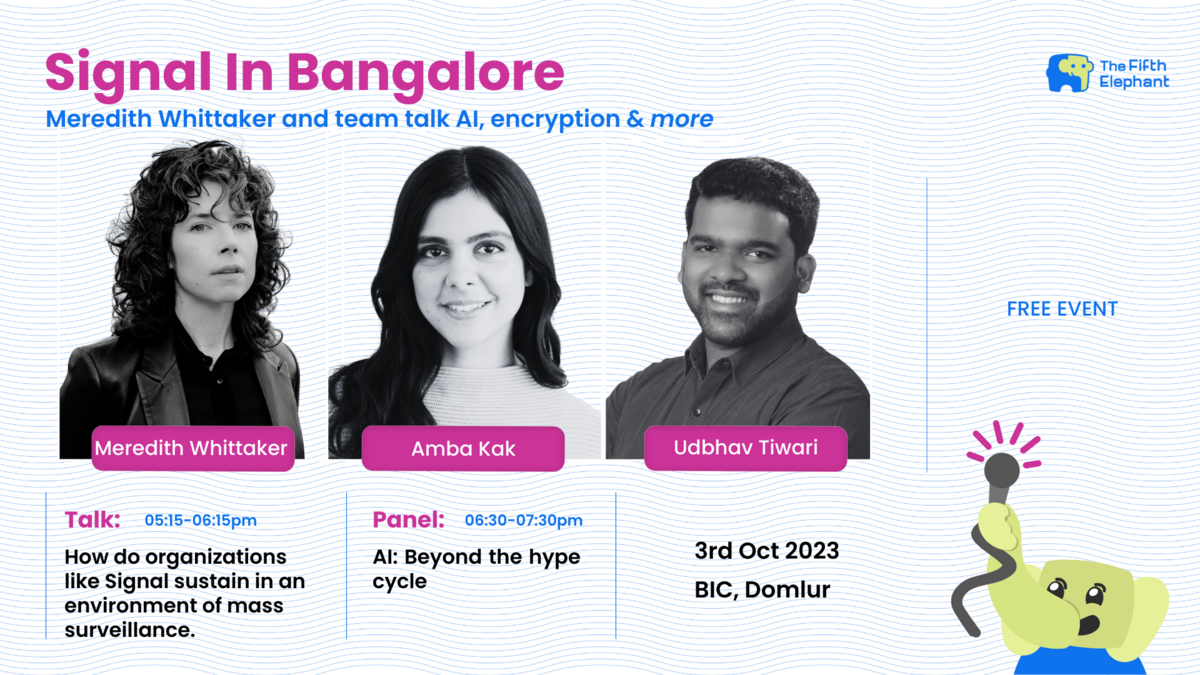

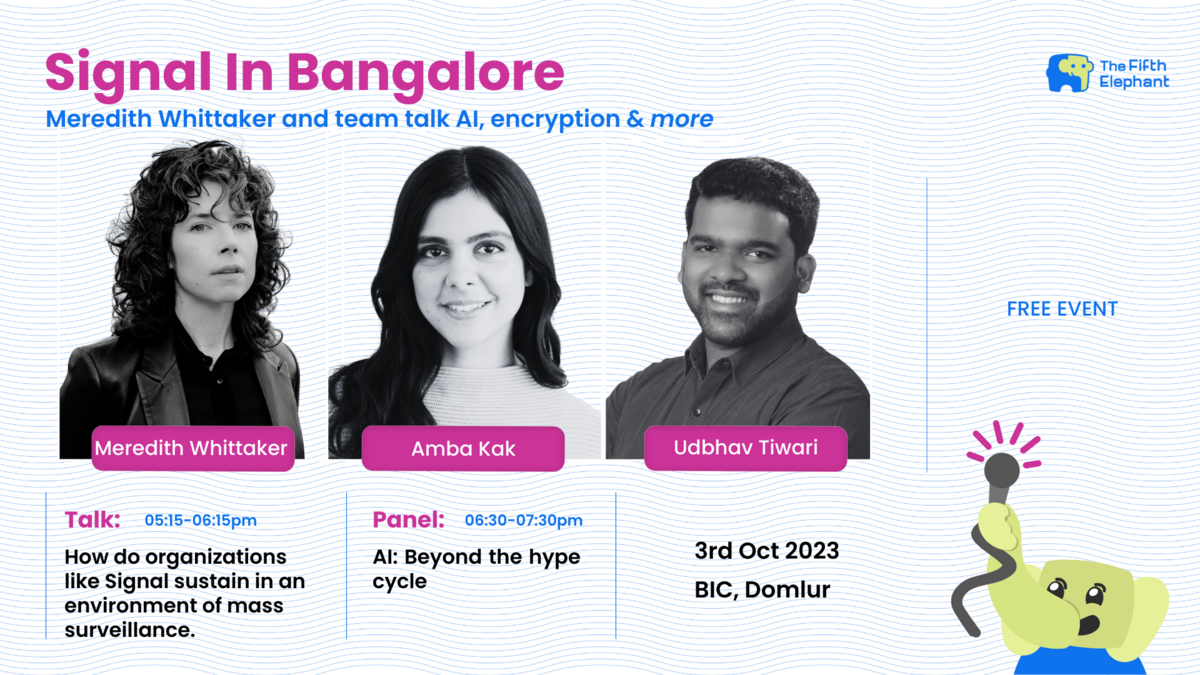

Signal in Bangalore

Signal Foundation's President, Meredith Whittaker, and the Signal team talk about AI, encryption and more.

Tue, 3 Oct 2023, 05:00 PM – 07:30 PM IST

Anwesha Sen

@anwesha25

Submitted Oct 12, 2023

On 3rd October 2023, a public event was hosted in collaboration with the Signal Foundation, The Fifth Elephant and Bangalore International Centre (BIC). The event consisted of two sessions. The following is a summary of the second session, a discussion between Meredith Whittaker (President of Signal Foundation), Udbhav Tiwari (Head of Global Product Policy at Mozilla), and Amba Kak (Executive Director at AI Now Institute).

AI is more of a marketing term than a term of art, and over its 70-year history it has been applied to a wide and disparate variety of technical approaches. Currently, what is being marketed as AI is a class of data-intensive and compute-intensive probabilistic models. Data and compute are the concentrated resources at the heart of the surveillance business model, and they are currently in the hands of a few large companies, the only ones that can afford the resources to take these large-scale models from development through deployment. The term AI is a signifier that carries a lot of weight, attracts a lot of media attention, and moves a lot of philanthropic capital, whether or not it has a clear definition.

Signal: AI is exacerbating the surveillance business model of the tech industry, within which Signal exists. AI calls for more and more data on everyone, which produces surveillance, and it is fundamentally a surveillance technology. It makes determinations about people, communities, politics, etc., and those determinations themselves become data. Large AI systems call for more surveillance of aspects of people’s lives in order to collect data, which is numerated, put into databases, and fed into these systems. Signal is the world’s largest truly private messaging app and does not collect data. It exists as a counterforce against the surveillance economy and the surveillance capacities of these increasingly large AI systems.

Mozilla: Mozilla recently started mozilla.ai, a research lab that will attempt to explore openness in AI. Separately, the Mozilla Foundation has worked under the broader umbrella of trustworthy AI on issues such as bias and discrimination.

AI Now Institute: In the policy space, the AI Now Institute is working to replace the term “AI” with “automated decision systems” (ADS). The new term moves attention away from magical, potentially intelligent systems and toward systems that make decisions about allocating resources, curating people’s social and economic lives, etc. It has gained quite a lot of currency in policy conversations, particularly in the US. Another aspect of AI policy is the claim that there is a blank regulatory slate for the new AI technologies being developed. AI is made up of components such as data, compute, and certain kinds of algorithmic and statistical models, and all of these are already regulated by policies such as data privacy laws and competition law, which are therefore also AI policies. Hence, the claim that there are no regulations is false.

The term AI is wrapped up in a lot of mythologizing, in which the marketing narrative is that of something path-breaking that achieves human-like intelligence. This narrative sells a technology that can only be developed and deployed by a handful of corporations, largely based in the US and China. Governments and corporations also want this technology on their side, so that they can use it to control their workers and populations and to otherwise benefit themselves.

As for regulations, there is a trend in which the people in charge of AI companies are moving from opposing regulation, on the grounds that it would stifle innovation, to supporting high levels of regulation, on the grounds that this technology is dangerous. The simplified analysis behind this trend is that these companies want to create regulatory moats around themselves that make it easier for them to create these products and services, even if the price is something as harsh as licensing. Traditionally, technology has been kept in check and made a communal resource through ideas like open source. In open source, people create code that other people can use, understand, and then deploy. These companies view this very model as a threat to their having the sole ability to create or perform generative AI functions. While open source may not fix the problems with AI, it offers a different lens on it, one that is not complete corporate control.

The conversation around AI regulations is also highly securitized. One version of this argument is that promoting accountability, regulations, and antitrust interventions threatens national security interests by slowing down innovation and development, whereas the other version is that large language models (LLMs) may end up creating bioweapons. Both arguments inflate reality out of proportion and take attention away from the actual issue at hand, which is that accountable and less concentrated AI systems are what national security requires.

There is another spectrum when it comes to open source AI. On one hand, there is the fear that open source AI would give bad actors easy access to a potentially dangerous technology, and on the other hand, there is the claim that open source AI will democratize everything. The fundamental issue here is that AI has not even been properly defined, let alone open source AI. This leads to ambiguities around what open source AI really is. There is also a huge barrier to creating AI models, as it requires huge amounts of training data, compute, labor, and other resources, which is antithetical to “openness”.

However, what is currently seen as open AI, i.e. where one can view the model’s code, has certain benefits: it allows for transparency, independent verification of the code, and checking it for various harms. It also enables others in the ecosystem to engage with the technology in ways that go beyond the paradigm companies would traditionally be comfortable with. The question of liability comes to the forefront here, and it is something that product liability regulation has also not dealt with. In open source software, the license states whether or not the author is liable, but no equivalent exists for someone who makes minor tweaks to an open AI model.
