Language Models are Few-Shot Learners

Building NLP applications by prompting LLMs

Fri, 9 Feb 2024, 06:15 PM – 07:30 PM IST

"Language Models are Few-Shot Learners" is an important paper in Generative AI and Natural Language Processing. It introduced GPT-3 and showed that large language models can generalize as task-agnostic learners.

The paper sowed the seeds for building NLP applications by prompting large language models with zero-shot, one-shot, and few-shot learning prompts. This was a huge advancement from task-specific modeling and also closer to how the human brain works by applying past learning to new data.
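To make the three prompting styles concrete, here is a minimal sketch of how such prompts can be assembled as plain text. The helper name `build_prompt` and the variable names are hypothetical, and the `=>` layout is only one reasonable convention, modeled on the English-to-French illustration in the paper. No model is called; the sketch only shows how the number of in-context demonstrations changes the prompt.

```python
def build_prompt(task_description, examples, query):
    """Assemble a prompt: task description, k demonstrations, then the query.

    k = 0 gives a zero-shot prompt, k = 1 one-shot, k > 1 few-shot.
    (Hypothetical helper for illustration; not an API from the paper.)
    """
    lines = [task_description]
    for source, target in examples:
        # Each demonstration shows the model one solved instance of the task.
        lines.append(f"{source} => {target}")
    # The query is left unanswered so the model completes it.
    lines.append(f"{query} =>")
    return "\n".join(lines)


task = "Translate English to French:"
demos = [("sea otter", "loutre de mer"), ("peppermint", "menthe poivrée")]

zero_shot = build_prompt(task, [], "cheese")
one_shot = build_prompt(task, demos[:1], "cheese")
few_shot = build_prompt(task, demos, "cheese")

print(few_shot)
```

In all three cases the model's weights stay fixed; only the text of the prompt changes, which is what distinguishes this approach from task-specific fine-tuning.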

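GPT-3 uses the same model architecture as GPT-2, scaled up roughly 100x (175 billion parameters versus 1.5 billion), except that its layers alternate between dense attention and the locally banded Sparse Attention pattern introduced in the Sparse Transformer paper.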
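The Sparse Attention mentioned above restricts which earlier positions each token may attend to. The following is a minimal sketch of that idea, assuming a locally banded causal pattern that alternates with dense causal layers. The function names, the window size, and the choice of which layers are dense are illustrative assumptions, not details taken from the paper.

```python
def causal_mask(n):
    """Dense causal mask: position i may attend to every position j <= i."""
    return [[j <= i for j in range(n)] for i in range(n)]


def banded_causal_mask(n, window):
    """Locally banded causal mask: position i attends only to the
    `window` most recent positions (itself included)."""
    return [[(j <= i) and (i - j < window) for j in range(n)] for i in range(n)]


def layer_masks(n_layers, n, window):
    """Alternate dense and banded masks across layers.

    Assumption: even-numbered layers are dense, odd-numbered layers are banded.
    """
    return [
        causal_mask(n) if layer % 2 == 0 else banded_causal_mask(n, window)
        for layer in range(n_layers)
    ]


for row in banded_causal_mask(5, 2):
    # Print each row as 1s (allowed) and 0s (masked out).
    print("".join("1" if allowed else "0" for allowed in row))
```

A banded layer touches on the order of `n * window` attention entries instead of `n * n`, which is the motivation for mixing it with dense layers at long sequence lengths.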

The following discussion endeavors to provide a comprehensive understanding of GPT-3 by addressing various facets.

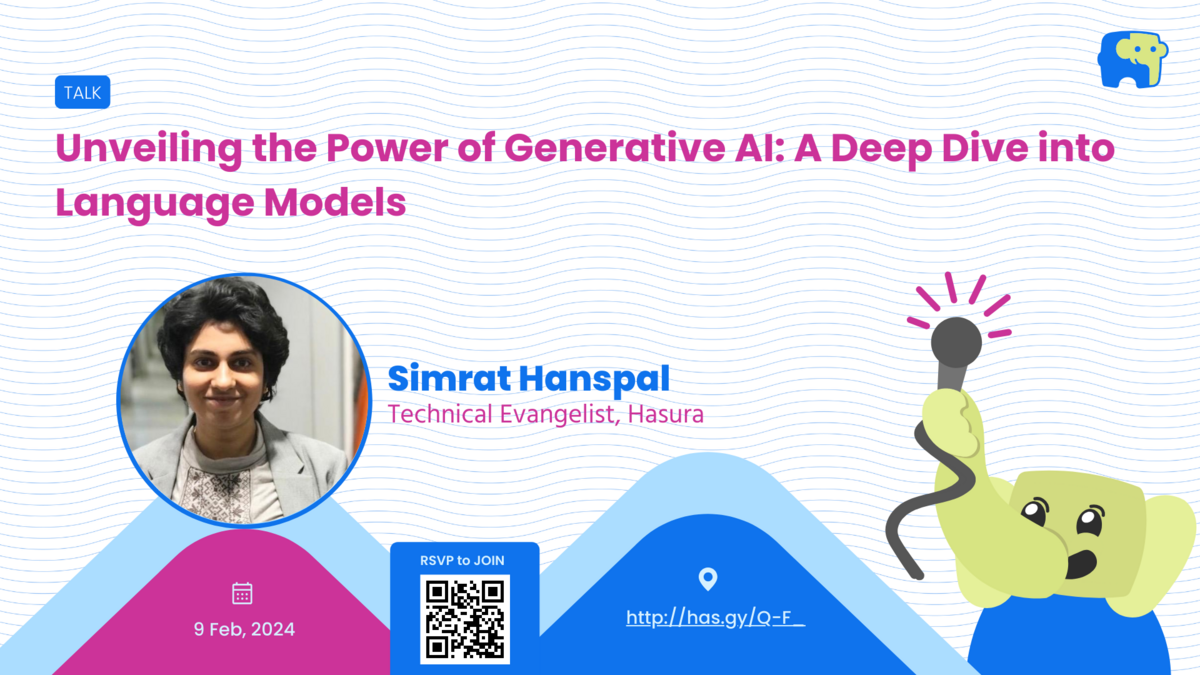

Simrat Hanspal has a career spanning over a decade in the AI and ML space, specializing in Natural Language Processing (NLP).

Simrat is currently spearheading AI product strategy at Hasura. She has previously led AI teams at renowned organizations such as VMware, FI Money, and Nirvana Insurance.

This is an in-person paper reading session. RSVP to be notified about the venue.

Bharat Shetty Barkur, a member of The Fifth Elephant, is the curator of the paper discussions.

Bharat has worked across different organizations such as IBM India Software Labs, Aruba Networks, Fybr, Concerto HealthAI, and Airtel Labs. He has worked on products and platforms across diverse verticals such as retail, IoT, chat and voice bots, edtech, and healthcare leveraging AI, Machine Learning, NLP, and software engineering. His interests lie in AI, NLP research, and accessibility.

The goal is for the community to understand popular papers in the Generative AI, Deep Learning, and Machine Learning domains. Bharat and other co-curators seek to put together papers that will benefit the community, and to organize reading and learning sessions driven by experts and curious folks in these fields.

The paper discussions will be conducted every month - online and in person.

The Fifth Elephant is a community-funded organization. If you like the work that The Fifth Elephant does and want to support its meet-ups and activities, online and in person, contribute by picking up a membership.

For inquiries, leave a comment or call The Fifth Elephant at +91-7676332020.

Hasura

3rd Floor, Building No. 37/38, 80 Feet Rd, 3rd Block Koramangala,

1A Block, SBI Colony, Koramangala, Bengaluru, Karnataka 560034
